Author: Jurij Mihelič, Beyond Semiconductor

Secure-by-formal-design: Towards better software and hardware assurance

Developing and building a cross-platform open security stack for connected devices is a demanding endeavor necessitating expertise in various fields to solve challenges on multiple layers of the hardware and software stack (or stick, as put into a nice metaphor in one of the previous blog posts).

In the ever-evolving digital landscape, there's a growing need to provide and improve security in newly developed as well as existing computer systems. These are inherently complex challenges as the system’s security vulnerabilities, adversary approaches, and attack techniques only improve over time. As a well-known security specialist once said: Attacks always get better, they never get worse.

Secure-by-design

This is where the Secure-by-design approach comes into play, which is similar as correct-by-design, but with a foucs on the security. Building security into the design phase as a core business requirement, rather than addressing it as an afterthought, can help in creating more robust systems. In this approach, security is considered and built into the system at every layer and starts with a robust architecture design in order to provide solutions for requirements such as authentication authorization, confidentiality, integrity, availability, non-repudiation and others.

Complementing the secure-by-design approach is also the secure-by-default approach with a focus on the idea that products are secure to use “out of the box”, requiring little to no configuration changes necessary. It is often assumed that secure-by-design subsumes the secure-be-default.

Throughout the history and practice of hardware and software development, various design patterns, practices, and guidelines have already been established to support the design of secure systems. Several of them have become well-known, such as:

- Open design: Assume protection mechanisms, techniques, and algorithms are known to attackers; do not rely on security through obscurity.

- Principle of least privilege: Subjects should have minimal privileges needed to complete their legitimate tasks.

- Secure default privileges: Attackers won’t complain if they gain illegitimate access, but legitimate users will if their access is denied.

- Separation of privilege: System access rights should be distributed among multiple subjects.

- Continuous access control: Perform authorization for every resource access.

- User-friendly security scheme: Complex security schemes will be ignored or exploited by users, while overly simple schemes will likely be ineffective.

Nevertheless, the secure-by-design approach, as described by the Cybersecurity and Infrastructure Security Agency, goes much further and broader by establishing three general principles:

- Take ownership of customer security outcomes and evolve products accordingly. The burden of security should not fall solely on the customers.

- Embrace radical transparency and accountability. Manufacturers should distinguish themselves from the rest of the community in their ability to deliver safe and secure products. This may involve sharing information and updating CVE records, among others.

- Build organizational structure and leadership to achieve these goals. Besides technical subjects, focus also on the executive layers of an organization as they are primary decision-makers and need to prioritize security as a critical element of product development.

We encourage the curious reader to look more in-depth at the CISA’s secure-by-design document.

Formal approaches

The proactive Secure-by-Design approach may further be complemented by the use of formal methods. These are mathematical techniques that allow us to specify and verify the entire system, leaving no stone unturned in our pursuit of security.

Recent advancements, such as the security of the seL4 operating system and DARPA’s Little Bird helicopter, illustrate the potential of formal methods to transform high-assurance software design. Nevertheless, the use of formal methods has traditionally been considered an arduous and costly practice. For example, the cost of seL4 verification has been estimated to be $440 per line of code for performing safety and security proofs. At the same time, this is still low in comparison to the rule-of-thumb $1000 per line of code estimate to achieve Common Criteria EAL6.

For these reasons, a plethora of formal approaches exist, spanning from lightweight formal methods such as some forms of model checking, Alloy object modeling, Z notation etc. to more demanding ones such as formal verification comprising of formal proofs often performed with proof assistant software such as Isabelle/HOL, Coq etc.

CROSSCON formal framework

One of the CROSSCON project's objectives is to provide IoT stakeholders with methodology, techniques, and related tools to formally verify "correct by design" secure open-source software and firmware for connected devices. Several of our efforts, tasks, and deliverables are focused on this objective.

Within the WP2 Design Specification and Assurance for IoT work package we provide two fromalization-related deliverables (currently in draft form):

- D2.1 CROSSCON Open Specification describing a high-level architecture of the CROSSCON Stack, its components, trusted services, and hardware/software co-design of CROSSCON SoC.

- D2.2 CROSSCON Formal Framework describing the formalization of the CROSSCON Stack and mainly based on the results provided within the specification.

In the CROSSCON stack formalization, we adhere to a variant of the seL4 security properties: confidentiality, integrity, and availability. These properties are all also captured within the CIA triad, an established model designed to guide security modeling.

Separation kernel formal model

Separation kernel is a low-level system component that segregates a system into isolated domains. The importance of this segregation cannot be overstated — it ensures that a compromise in one section doesn't impact the others.

Nevertheless, a separtion kernel model is an abstraction which may exhibit in various possible refinements. For example, within the CROSSCON project our intent is to use the model to describe the separation properties of the CROSSCON Hypervisor as well as Baremetal TEE.

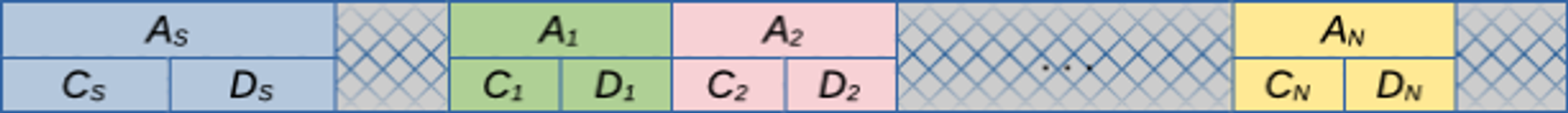

To do this, our model also abstracts several features provided by the underlaying hardware, such as unprivileged and privileged mode of instruction execution, memory and memory isolation properties (as represented in the figure below). Based on this and three basic operations, i.e., memory read, memory write, and instruction fetch, we provide a framework for defining the semantics of machine instructions.

As as result, the instructions properly defined within our framework, exhibit several security properties:

- Integrity: an object cannot be altered by a non-authorized subjects. Moreover, we separately show confidentiality for various memory regions such as domain data, domain code, kernel data, kernel code etc.

- Confidentiality: an object cannot be accessed by non-authorized subjects. Similarly, as for integrity, we show confidentiality for particular memory regions.

- Availability: each domain has access to its own resources.

In order to provide versatile separation kernel model, many challenges have to be addressed. These include supporting a switch of execution context to provide multitasking environment, interrupt handling without information interference, memory sharing between domains, supervised kernel calls etc. While we have already implemented some of these features and described them in our deliverable (D2.2), others are still being worked on.

Information flow paradigm

The separation kernel model is actually a special case of a more broader information flow tracking and monitoring (IFTAM) paradigm. Here, the intent is to track the flow of information within a system and possibly limiting it when it is crossing the system’s boundaries. In layman's terms, it's about keeping a watchful eye on where your data is going and who's using it. We have already discussed this topic in its generality in our previous blog posts.

In security, the IFTAM paradigm is pervasive in various forms. Here, we also see its connection to our research in the WP2 & WP4 tasks on the Perimeter Guard hardware module that is used to monitor the boundary of the secure computing system with a goal to preserve isolation guarantees between domains when using shared hardware modules (e.g., cryptographic accelerator, neural processing unit, or I/O devices). Obviously, this is a complex task due to the functional diversity present in hardware modules.

Furthermore, we also see the usefulness of IFTAM concepts in our other effort focused on the information separation in Secure FPGA Provisioning, where the goal is to partition physical FPGA (field-programmable gate array) into multiple virtual FPGAs for efficient resource utilization and enhanced security.

Finally, our ulimate goal is to design a methodology and technologies that would allow tracking and enforcing information flows within the main processing unit as well as supplemental hardware modules. To achieve this, we are developing an approach, called data-driven secure computing (D2SC), which enables the processor developers to augment new or existing processing units to support dynamic information flow tracking for traditional information processing instructions.

Conclusion

Creating secure systems is not a one-time task but an ongoing process. It involves designing systems with security in mind and regularly updating them to keep pace with emerging threats. Remember, security is a journey, not a destination. Stay safe & secure!