Authors:

Shaza Zeitouni, Postdoctoral Researcher at TU Darmstadt

Huimin Li, PhD Student at TU Delft

Field Programmable Gate Arrays (FPGAs) are integrated circuits (ICs) that users can electrically program, or configure, to implement a wide range of digital circuits. Thanks to their parallel processing capabilities, support for diverse data types, low latency, and higher energy efficiency than standard computing platforms, FPGAs are powerful tools for accelerating computations and tackling complex problems across many fields. Continuous advances in FPGA technology have produced System-on-Chip (SoC) FPGAs, which integrate programmable logic on the same chip as hardened processors and other processing resources. SoC FPGAs enable custom accelerators that boost software performance and overall system efficiency. FPGAs are also deployed in commercial cloud platforms, such as Amazon EC2 F1, Microsoft Azure Catapult, and Alibaba Cloud F3. These cloud FPGAs underscore the pivotal role FPGAs play in today's technology landscape.

Like other computing resources, FPGAs are vulnerable to security threats such as unauthorized access and data leakage. Beyond the concerns they share with general-purpose computing resources, FPGAs are also prone to Intellectual Property (IP) theft. In this context, the IP is the hardware design of an accelerator that implements a specific algorithm and is programmed onto, and executed on, the FPGA. Leading FPGA manufacturers such as Intel and AMD Xilinx have integrated hardened cryptographic cores into their FPGAs to protect the confidentiality and integrity of IP designs.

Trusted Execution Environments (TEEs) on FPGAs

In conventional FPGA usage, users have physical access to their FPGAs, so they can securely program cryptographic keys into the devices in dedicated, secure facilities before deployment. In cloud deployment models, however, clients lack physical access to the FPGAs they rent, so protecting their IP requires the involvement of trusted third parties or the FPGA vendors. This mirrors Trusted Execution Environments (TEEs) on CPUs, where clients rely on certificates issued by the CPU manufacturer.

Depending on the FPGA platform, TEEs on FPGAs are established using one of two primary strategies. SoC FPGAs can build on the TEEs already available on their hardened processors, such as ARM TrustZone. This built-in TEE can be used to configure the FPGA securely, so that the trust established in the processor extends to the configured programmable logic.

In contrast, FPGAs without a TEE on a hardened processor require a different approach: a trusted shell that acts as a trust anchor. This shell is responsible for generating secret keys for communication with remote clients, securely configuring the FPGA, isolating client designs, and performing cryptographic operations on data. To establish trusted execution, the trust anchor must itself be protected, provided by the FPGA vendor, and loaded onto the FPGA before the client's design.

Remote users can then attest the FPGA configuration, verifying the authenticity and integrity of the loaded design. Bringing trusted execution to cloud FPGAs in this way gives organizations greater control over the security of their applications and data, even when direct physical access to the FPGA is limited or unavailable.
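The attestation step can be pictured as a challenge-response exchange. The following is a minimal sketch under stated assumptions, not the protocol of any particular vendor: the trust anchor measures the loaded design by hashing its bitstream and binds that measurement to a fresh nonce chosen by the client. A real design would sign this report with an asymmetric key certified by the FPGA vendor; to stay within the Python standard library, the sketch substitutes an HMAC key (`DEVICE_KEY`, hypothetical), which means verification must be done by a party holding that key, such as a vendor attestation service. The function names `measure`, `attest`, and `verify` are illustrative.

```python
import hashlib
import hmac
import os
from typing import Tuple

# Hypothetical device secret held by the trust anchor. It stands in for a
# vendor-certified signing key; with HMAC, only holders of this key can verify.
DEVICE_KEY = os.urandom(32)


def measure(bitstream: bytes) -> bytes:
    """Measurement of an FPGA configuration: the SHA-256 digest of its bitstream."""
    return hashlib.sha256(bitstream).digest()


def attest(loaded_bitstream: bytes, nonce: bytes) -> Tuple[bytes, bytes]:
    """Trust-anchor side: bind the loaded design's measurement to the verifier's nonce."""
    measurement = measure(loaded_bitstream)
    tag = hmac.new(DEVICE_KEY, measurement + nonce, hashlib.sha256).digest()
    return measurement, tag


def verify(expected_bitstream: bytes, nonce: bytes, measurement: bytes, tag: bytes) -> bool:
    """Verifier side: check integrity (expected design) plus authenticity and freshness (tag)."""
    if not hmac.compare_digest(measurement, measure(expected_bitstream)):
        return False  # a different design is loaded on the FPGA
    expected_tag = hmac.new(DEVICE_KEY, measurement + nonce, hashlib.sha256).digest()
    return hmac.compare_digest(tag, expected_tag)


# Usage: the client sends a fresh nonce and checks the returned report.
design = b"client accelerator bitstream (placeholder bytes)"
nonce = os.urandom(16)
measurement, tag = attest(design, nonce)
print(verify(design, nonce, measurement, tag))                 # True
print(verify(b"tampered bitstream", nonce, measurement, tag))  # False
```

Covering the nonce with the tag is what prevents an attacker from replaying an old, previously valid report.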

To distinguish them from TEEs implemented on CPUs, TEEs on FPGAs are referred to as FPGA-based TEEs. This paradigm extends the role of FPGAs from accelerating computation to protecting applications against threats in cloud environments.

Federated Learning: A Use Case for FPGA-based TEEs

The core idea of Federated Learning (FL) is that multiple clients collaborate to train a global model without sharing their datasets. In each training round, every client updates the global model it has received using its local dataset and sends the resulting local model to the server. The server aggregates the local models from all clients into an updated global model and distributes it back to the clients. FL supports various aggregation methods, of which federated averaging is the most popular: the central server collects model updates from all clients and computes their average, typically weighted by the size of each client's local dataset.
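As a minimal sketch of federated averaging, the function below weights each client's model by its number of local training samples, as in the original FedAvg formulation; with equal sample counts it reduces to the plain mean. Models are represented as flat lists of floats, an assumption made for brevity (a real framework would average per-layer tensors), and the name `fed_avg` is illustrative.

```python
from typing import List, Sequence


def fed_avg(client_models: Sequence[Sequence[float]], num_samples: Sequence[int]) -> List[float]:
    """Sample-size-weighted average of flattened client models."""
    if not client_models or len(client_models) != len(num_samples):
        raise ValueError("need one sample count per client model")
    total = sum(num_samples)
    dim = len(client_models[0])
    global_model = [0.0] * dim
    for model, n in zip(client_models, num_samples):
        if len(model) != dim:
            raise ValueError("all models must have the same number of parameters")
        weight = n / total  # this client's share of all training samples
        for i, w in enumerate(model):
            global_model[i] += weight * w
    return global_model


# Usage: two clients, the second holding three times as much data as the first.
print(fed_avg([[1.0, 2.0], [3.0, 4.0]], [1, 3]))  # [2.5, 3.5]
```

Weighting by sample count keeps a client with very little data from pulling the global model as strongly as a client with a large dataset.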

However, the integrity of the model in FL is vulnerable to poisoning attacks, which manipulate the global model so that it exhibits undesired behavior. Adversaries may use data poisoning, which injects biased or manipulated samples into the training data, or model poisoning, which modifies the model's parameters or structure during training to alter its behavior. Poisoning attacks are either untargeted, aiming to reduce the accuracy or usefulness of the trained model, or targeted, also known as backdoor attacks, which make the global model misbehave in specific ways that serve the attacker's objectives.

In addition to poisoning attacks, privacy attacks, also known as inference attacks, attempt to extract sensitive information about the data used to train a machine learning model. They take various forms, such as inferring whether specific samples were part of the training data or reconstructing individual samples from the training dataset.
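The effect of model poisoning on plain averaging can be illustrated with a toy example (all numbers are made up). Four benign clients submit similar updates, while one hypothetical malicious client submits an update pointing toward its own target and scales it up, a simple boosting strategy. This sketch illustrates the general idea only and does not reproduce any specific published attack.

```python
from statistics import fmean
from typing import List, Sequence


def mean_update(updates: Sequence[Sequence[float]]) -> List[float]:
    """Plain coordinate-wise mean of flattened model updates (unweighted averaging)."""
    return [fmean(coords) for coords in zip(*updates)]


# Four benign clients whose updates roughly agree (made-up numbers).
benign = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.21], [0.11, -0.19]]

# One hypothetical malicious client: an update toward its own target, scaled up
# so that it dominates the average.
attacker_target = [-1.0, 1.0]
boost = 10.0
malicious = [boost * x for x in attacker_target]

clean = mean_update(benign)
poisoned = mean_update(benign + [malicious])
print(clean)     # roughly [0.105, -0.195]
print(poisoned)  # roughly [-1.916, 1.844]
```

A single scaled update flips the sign of both coordinates of the aggregate, which is why the server needs some way to inspect and filter updates before averaging them.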

Existing FL defenses typically either protect client models from potentially malicious servers or prevent specific backdoor attacks launched by malicious clients. Addressing both threats simultaneously, however, presents a dilemma. On the one hand, identifying and filtering poisoned models requires the server to inspect model updates, which can compromise client privacy. On the other hand, protecting client privacy requires preventing the server from accessing local models. Privacy-preserving techniques such as Homomorphic Encryption (HE) and Secure Multi-Party Computation (SMPC) offer potential solutions, but they can significantly slow down aggregation, particularly for complex backdoor defenses that rely on vector metric computations or clustering. Another option is to use CPU-based TEEs, which protect the privacy of local models while still allowing the aggregator to inspect them. However, the limited computational capacity of CPU-based TEEs imposes significant overhead on computation-intensive algorithms, such as those that compute Euclidean distances between local models. This is where FPGA-based TEEs offer a practical way to ensure both the privacy and the security of FL.
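To make the computational cost concrete, the sketch below implements a distance-based filter with Krum-style scoring: it computes the squared Euclidean distance between every pair of local models, scores each model by the sum of distances to its `n - f - 2` nearest neighbours (where `f` is an assumed upper bound on malicious clients), and averages the `keep` lowest-scoring models, similar in spirit to Multi-Krum. The text does not say which defense the aggregator runs, so this is only a stand-in for the class of vector-metric defenses mentioned above; all names are illustrative. With `n` clients and `d` parameters, the pairwise step alone costs O(n^2 * d) operations, and for models with millions of parameters this is the kind of workload that strains CPU-based TEEs, while the independent pairwise distances lend themselves to parallel hardware.

```python
from itertools import combinations
from typing import List, Sequence, Tuple

Model = Sequence[float]


def pairwise_sq_distances(models: Sequence[Model]) -> List[List[float]]:
    """Squared Euclidean distance between every pair of flattened models: O(n^2 * d)."""
    n = len(models)
    dist = [[0.0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        d = sum((a - b) ** 2 for a, b in zip(models[i], models[j]))
        dist[i][j] = dist[j][i] = d
    return dist


def krum_scores(models: Sequence[Model], max_malicious: int) -> List[float]:
    """Krum-style score: sum of squared distances to the n - f - 2 closest other models."""
    n = len(models)
    k = n - max_malicious - 2
    if k < 1:
        raise ValueError("need more than max_malicious + 2 clients")
    dist = pairwise_sq_distances(models)
    scores = []
    for i in range(n):
        nearest = sorted(dist[i][j] for j in range(n) if j != i)[:k]
        scores.append(sum(nearest))
    return scores


def filtered_average(models: Sequence[Model], max_malicious: int,
                     keep: int) -> Tuple[List[int], List[float]]:
    """Average only the `keep` lowest-scoring models; also return their indices."""
    scores = krum_scores(models, max_malicious)
    chosen = sorted(range(len(models)), key=lambda i: scores[i])[:keep]
    dim = len(models[0])
    return chosen, [sum(models[i][c] for i in chosen) / len(chosen) for c in range(dim)]


# Usage: four similar benign updates and one far-away (hypothetical) poisoned update.
updates = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.21], [0.11, -0.19], [-10.0, 10.0]]
chosen, aggregate = filtered_average(updates, max_malicious=1, keep=3)
print(sorted(chosen))  # the poisoned update (index 4) is not among the selected indices
```

Note that the filter needs plaintext access to every local model, which is exactly the privacy tension described above; running it inside a TEE is what allows inspection without exposing the models to the server operator.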

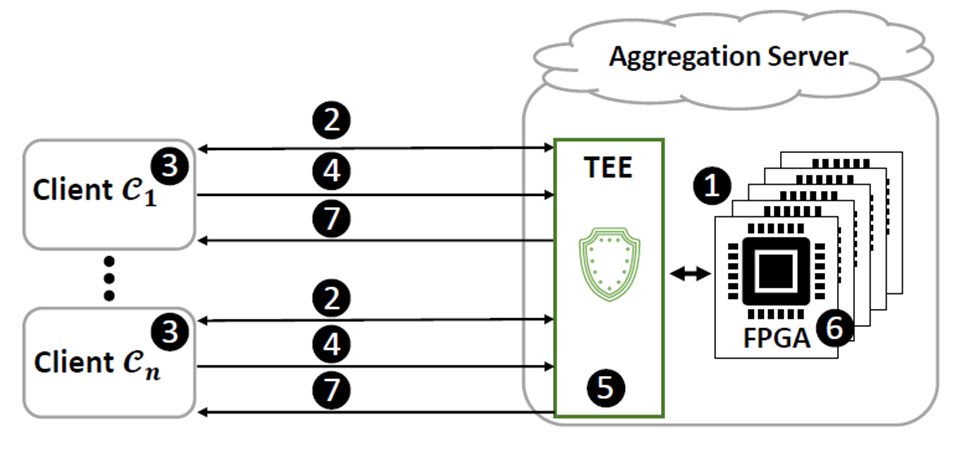

The figure above shows FLAIRS, a framework that leverages FPGA-based TEEs for the FL aggregation process. By using FPGA resources, it efficiently addresses the performance bottlenecks of software-only solutions while mitigating backdoor and inference attacks. The FLAIRS workflow involves the following key steps:

- Establishing a TEE on the FPGA to securely process clients' models.

- Attesting the TEE: clients verify the integrity and authenticity of the FPGA configurations that will process their models.

- Encrypting the models: each client encrypts its model with an individual secret session key exchanged with the TEE.

- Transmitting the encrypted models from the clients to the aggregator.

- Storing the models in memory, ready for aggregation.

- Aggregating the models.

- Distributing the aggregated model back to all clients.
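The workflow steps above can be tied together in a single-process sketch of the data flow. It rests on loudly stated assumptions: the class `TeeAggregator` and the helpers `seal` and `unseal` are hypothetical; a toy SHA-256 keystream with an HMAC tag replaces a real authenticated cipher such as AES-GCM (it is not secure and only keeps the example dependency-free); session keys are generated by the TEE and handed out instead of being derived through an authenticated key exchange; aggregation is a plain mean; and encrypting the global model on its way back is a design choice of the sketch, not something the text specifies. What it shows is the separation: outside the TEE only ciphertext exists, and plaintext models live only inside it.

```python
import hashlib
import hmac
import os
import struct
from typing import Dict, List, Tuple

NONCE_LEN, TAG_LEN = 16, 32


def _subkeys(session_key: bytes) -> Tuple[bytes, bytes]:
    """Derive separate encryption and MAC keys from one session key."""
    return (hashlib.sha256(session_key + b"enc").digest(),
            hashlib.sha256(session_key + b"mac").digest())


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy SHA-256 counter-mode keystream; a stand-in for a real cipher such as AES-GCM."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def seal(session_key: bytes, model: List[float]) -> bytes:
    """Serialize a flattened model, encrypt it, then append a MAC (encrypt-then-MAC)."""
    enc_key, mac_key = _subkeys(session_key)
    plaintext = struct.pack(f"<{len(model)}d", *model)
    nonce = os.urandom(NONCE_LEN)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def unseal(session_key: bytes, blob: bytes) -> List[float]:
    """Check the MAC, then decrypt and deserialize; raise ValueError on tampering."""
    enc_key, mac_key = _subkeys(session_key)
    nonce, ciphertext, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("model integrity check failed")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    plaintext = bytes(c ^ k for c, k in zip(ciphertext, stream))
    return list(struct.unpack(f"<{len(plaintext) // 8}d", plaintext))


class TeeAggregator:
    """Hypothetical model of the aggregation logic running inside the FPGA-based TEE."""

    def __init__(self) -> None:
        self.session_keys: Dict[str, bytes] = {}
        self.memory: Dict[str, List[float]] = {}  # decrypted models, held only inside the TEE

    def register(self, client_id: str) -> bytes:
        # Steps 1-2 (TEE established and attested) are assumed to have happened already.
        # Simplification: the key used in step 3 is generated here and returned; a real
        # system would derive it via an authenticated key exchange bound to attestation.
        key = os.urandom(32)
        self.session_keys[client_id] = key
        return key

    def receive(self, client_id: str, blob: bytes) -> None:
        # Steps 4-5: only ciphertext crosses the network; the TEE decrypts and stores it.
        self.memory[client_id] = unseal(self.session_keys[client_id], blob)

    def aggregate_and_distribute(self) -> Dict[str, bytes]:
        # Step 6: plain averaging here; a filtering defense could run at this point.
        models = list(self.memory.values())
        global_model = [sum(column) / len(models) for column in zip(*models)]
        self.memory.clear()
        # Step 7: return the global model to every client, sealed under its session key.
        return {cid: seal(key, global_model) for cid, key in self.session_keys.items()}


# Usage with two clients.
tee = TeeAggregator()
local_models = {"alice": [1.0, 2.0], "bob": [3.0, 4.0]}
keys = {cid: tee.register(cid) for cid in local_models}
for cid, model in local_models.items():
    tee.receive(cid, seal(keys[cid], model))  # step 3 (encrypt) and step 4 (transmit)
outbox = tee.aggregate_and_distribute()
print(unseal(keys["alice"], outbox["alice"]))  # [2.0, 3.0]
```

Encrypt-then-MAC with separate derived keys means any modification of a model in transit is rejected before decryption, so the aggregation logic never operates on tampered input.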

Conclusion

FPGA-based TEEs offer a transformative approach to security in cloud-based environments, notably in fields such as Federated Learning (FL). Their distinctive advantage lies in combining robust security with computational efficiency. By integrating secure configuration capabilities into Field Programmable Gate Arrays, FPGA-based TEEs allow clients to safeguard their sensitive data and workloads without compromising computational performance. This ability to balance privacy and computation makes them a promising path toward efficient and resilient systems that address the complex security challenges of cloud deployments.